How we blend AI and human expertise

Mind Supernova draws on a diverse network of experts who perform LLM evaluation and red teaming to identify risks.

Taxonomy creation

We design tailored taxonomies to match the model's use cases and capabilities. By starting with unique taxonomies for each domain of knowledge, we end up with well-structured and representative datasets.

Outcome: Taxonomy for each unique use case
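As a rough illustration of how a taxonomy can drive a representative dataset, the sketch below stores a taxonomy as a nested dictionary and spreads a sample budget evenly across its leaf categories. The category names, the `leaf_paths` and `allocate` helpers, and the even-split policy are all assumptions made for this example, not a description of Mind Supernova's actual pipeline.

```python
def leaf_paths(node: dict, prefix: tuple = ()) -> list:
    """Return every root-to-leaf path in a nested-dict taxonomy.

    A category with an empty dict as its value is treated as a leaf.
    """
    paths = []
    for name, children in node.items():
        path = prefix + (name,)
        if children:
            paths.extend(leaf_paths(children, path))
        else:
            paths.append(path)
    return paths


def allocate(taxonomy: dict, total: int) -> dict:
    """Split `total` samples as evenly as possible across leaf categories."""
    leaves = leaf_paths(taxonomy)
    if not leaves:
        return {}
    base, extra = divmod(total, len(leaves))
    # The first `extra` leaves receive one additional sample each.
    return {
        " / ".join(path): base + (1 if i < extra else 0)
        for i, path in enumerate(leaves)
    }


# Hypothetical taxonomy for illustration only.
example_taxonomy = {
    "coding": {"bug fixing": {}, "refactoring": {}},
    "math": {"algebra": {}},
}

quotas = allocate(example_taxonomy, 10)
```

An even split is the simplest policy; a real project might instead weight each leaf by how often the corresponding use case occurs in production traffic.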

Data generation

We augment state-of-the-art AI & ML technologies with expert human feedback in sophisticated data pipelines.

Our team has the expertise and experience to:

Outcome: Raw generated dataset

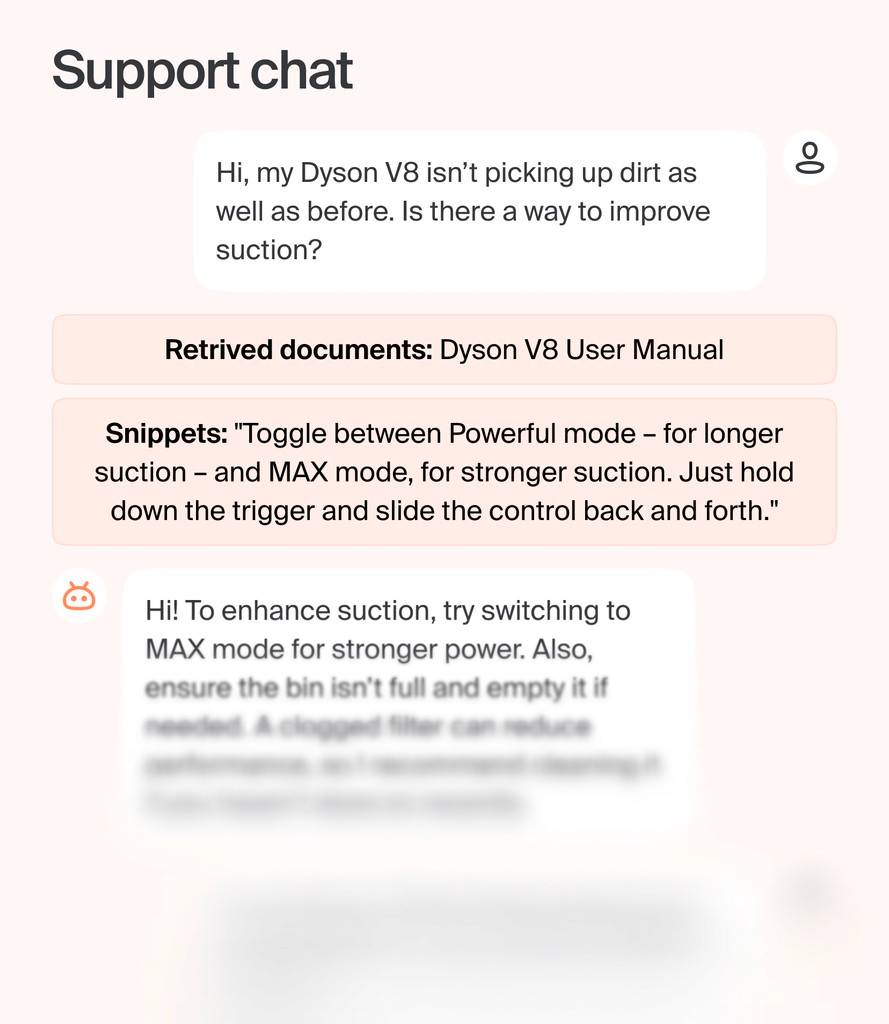

Data verification

Our experts perform comprehensive validations on generated data to curate an accurate and reliable dataset for your model's needs.

Outcome: High-quality dataset

Auto-verifiable tasks for Deep Research Agent

Our team has built a dataset to enhance the Deep Research Agent. Each task includes a complex domain-specific prompt and a set of rubrics for automatic answer verification. End-to-end reinforcement learning (RL) on this data significantly improved the agent’s performance on extensive online research tasks.

Application: Enhancing Deep Research Agent using end-to-end RL
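To make the idea of an auto-verifiable task concrete, here is a minimal sketch of a task that pairs a prompt with weighted rubric items and scores an answer as the fraction of rubric weight it satisfies. The `RubricItem`, `Task`, and `score_answer` names are hypothetical, and the checks are simple string predicates chosen for illustration; the rubrics described above may well be graded differently, for example by a model.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RubricItem:
    description: str
    check: Callable[[str], bool]  # returns True if the answer satisfies this item
    weight: float = 1.0


@dataclass
class Task:
    prompt: str
    rubric: List[RubricItem]


def score_answer(task: Task, answer: str) -> float:
    """Return the fraction of rubric weight the answer satisfies, in [0, 1]."""
    total = sum(item.weight for item in task.rubric)
    if total == 0:
        return 0.0
    earned = sum(item.weight for item in task.rubric if item.check(answer))
    return earned / total


# Hypothetical task for illustration only.
example_task = Task(
    prompt="Summarize the main risks facing regional banks.",
    rubric=[
        RubricItem("mentions interest rate risk",
                   lambda a: "interest rate" in a.lower(), weight=2.0),
        RubricItem("cites at least one source",
                   lambda a: "http" in a.lower()),
        RubricItem("stays under 200 words",
                   lambda a: len(a.split()) <= 200),
    ],
)

reward = score_answer(example_task, "Interest rate risk dominates.")
```

Because the score is computed without a human in the loop, a value like `reward` could serve as the reward signal in an RL training loop, which is the sense in which such tasks are auto-verifiable.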

Synthetic data verification and/or editing

Research conducted by Scale’s Safety, Evaluations, and Analysis Lab will enable model-assisted approaches.

Application: Coding agent for repository maintenance and bug-fixing tasks

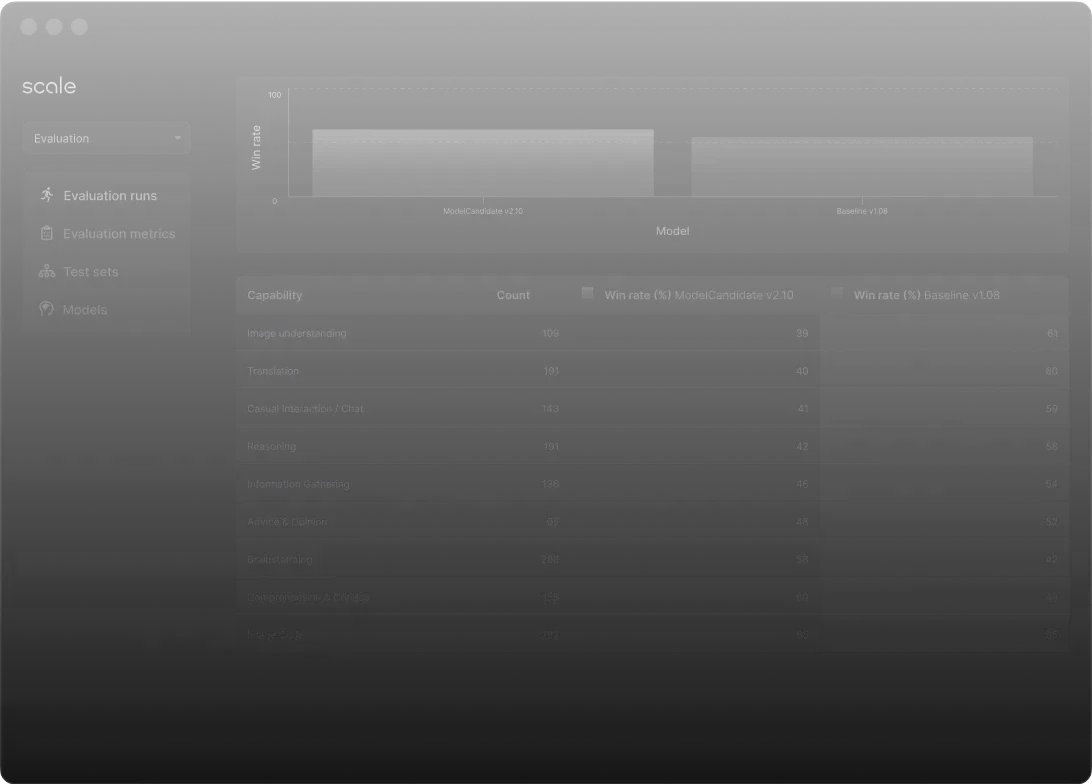

Reliable and Robust Performance Management

Mind Supernova Evaluation is designed to enable frontier model developers to understand, analyze, and iterate on their models by providing detailed breakdowns of LLMs across multiple facets of performance and safety.

Proprietary Evaluation Sets

High-quality evaluation sets across domains and capabilities ensure accurate model assessments without overfitting.

Rater Quality

Expert human raters provide reliable evaluations, backed by transparent metrics and quality assurance mechanisms.

Product Experience

User-friendly interface for analyzing and reporting on model performance across domains, capabilities, and versions.

Targeted Evaluations

Custom evaluation sets focus on specific model concerns, enabling precise improvements via new training data.

Reporting Consistency

Enables standardized model evaluations for true apples-to-apples comparisons across models.